Although no international guidelines on AI exist, the EU is well ahead in policy formulation, with a very clear view of what good looks like. JWG research has revealed major control gaps relative to other jurisdictions and draconian penalties for firms that fail to comply in 2023. In preparation, we will be developing detailed business use cases together with key stakeholders from across the many non-financial risk management tribes in order to map out a path forward this autumn.

Europe’s dash for AI gold standards

The EU’s forthcoming rules on Artificial Intelligence are cross-sector and have the biggest implications for global firms. Monetary penalties of up to €30M or 6% of annual revenue will be assessed for breaches in the highest risk category (e.g., vulnerability exploitation, trustworthiness of natural persons).
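To make the penalty ceiling concrete, the sketch below computes the maximum exposure for a few revenue levels. It assumes that the higher of the two limits applies, which the text above does not state, and the function name and default values are illustrative only.

```python
def max_penalty_eur(annual_revenue_eur: float,
                    fixed_cap_eur: float = 30_000_000,
                    revenue_share: float = 0.06) -> float:
    """Upper bound on the fine for the highest risk category.

    Assumption: the ceiling is the higher of the fixed amount and the
    revenue-based amount. Check the final legal text before relying on this.
    """
    return max(fixed_cap_eur, revenue_share * annual_revenue_eur)


if __name__ == "__main__":
    # Hypothetical revenue figures, chosen only to show both branches.
    for revenue in (100_000_000, 2_000_000_000, 10_000_000_000):
        ceiling = max_penalty_eur(revenue)
        print(f"revenue EUR {revenue:,.0f} -> ceiling EUR {ceiling:,.0f}")
```

Under that assumption, the revenue-based limit becomes the binding one for firms with annual revenue above €500M (€30M divided by 6%).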

On track for implementation in 2023, the regulation has a very broad definition of AI, which will scope in everything on a trader’s desktop and a good part of operations staff applications as well. Excel macros and search tools will need to be examined carefully.

It proposes a risk-based framework focused on the human and ethical implications of AI, which it buckets into four categories of risk. Worryingly for FS business owners, the high-risk category includes biometrics, creditworthiness and credit scoring.

The regulation lays out new controls, documentation and certification including:

- Development steps and general logic of the AI

- Description of the system architecture, training, validation

- Data requirements, provenance, scope and cleaning

- Human oversight measures

- Monitoring or continuous compliance.

High-risk AI systems will be registered, and the EU will maintain a database of information supplied by AI system providers, including a conformity certificate.
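As a rough illustration, the documentation items listed above could be tracked per AI system with a simple checklist. This is a minimal sketch, not a regulatory template: the class name, field names, item labels and document references are all hypothetical.

```python
from dataclasses import dataclass, field

# Item labels paraphrase the documentation list above; they are not official terms.
REQUIRED_ITEMS = (
    "development steps and general logic",
    "system architecture, training and validation",
    "data requirements, provenance, scope and cleaning",
    "human oversight measures",
    "monitoring",
)


@dataclass
class AISystemRecord:
    """Hypothetical record of the evidence held for one AI system."""
    name: str
    # Maps a required item to a reference for the supporting document.
    evidence: dict = field(default_factory=dict)

    def missing_items(self) -> list:
        """Return the required items that have no evidence attached yet."""
        return [item for item in REQUIRED_ITEMS if not self.evidence.get(item)]


if __name__ == "__main__":
    record = AISystemRecord(
        name="credit-scoring-model",
        evidence={"human oversight measures": "DOC-001"},
    )
    for item in record.missing_items():
        print(f"{record.name}: missing '{item}'")
```

A record with an empty `missing_items()` result would be a natural precondition for seeking a conformity certificate, although the actual certification process is defined by the regulation, not by this sketch.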

Global principle baselines

JWG has been busy capturing 300+ policy documents this year as leading regulators lay out important control requirements for technology and operations. While the EU has presented the most comprehensive policy to date, we have found new obligations popping up in other jurisdictions.

This means that non-financial risk and compliance experts will have to assemble a global standard from a patchwork of obligations. Over the course of the last two months, we have collated 115 AI policy documents from 2016-2021 from 12+ jurisdictions and created 10 benchmarks which describe the global baseline.

We have discussed the key deltas by region with our Trade Surveillance workshop to better understand the impact of variance away from baseline requirements. For this discussion we organised the AI control benchmarks into four broad buckets:

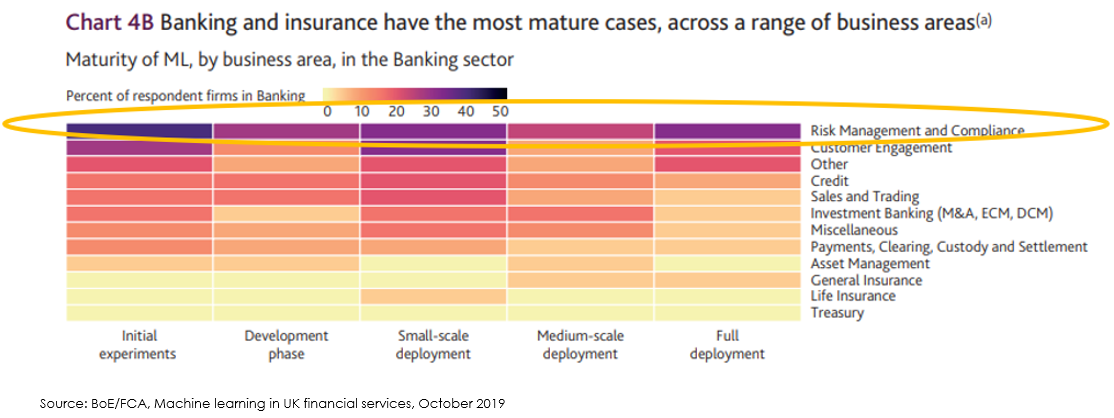

- Business use. Transparency of AI’s use and proportionality is a big focus. Regulators expect firms to weigh the intended use against the cost and to inform the customer when AI is being used. The UK, US, Netherlands and Hong Kong are generally on par with the global baseline, with the EU pushing well ahead in transparency obligations. In addition to any application involving a customer, this could have big implications for risk and compliance applications, which a survey from the Bank of England and FCA found to be the top users of machine learning.

- Model. Regulators have focused on new fairness, data quality and data protection controls. Keen to avoid discrimination, they will use an outcomes-based measuring stick when evaluating the quality of the code written and the data used. Hong Kong, the Netherlands and the UK are at the front of the pack in establishing standards, which include anonymisation and disclosures within a data governance framework.

- Oversight. A desire for supervision and knowledge of AI system performance has led to obligations for model governance, accountability and expertise. Organisational control standards and staff qualifications will need to be documented, trained, monitored, audited and reviewed throughout the lifecycle.

- Risk management. Formal requirements to minimise AI risks have been mandated as a safety blanket for global regulators. Governance of the robustness of an AI system, cyber security and third-party risk management ensures that senior management as well as suppliers remain under control and accountable. The UK, EU, US and Hong Kong have been taking the lead in vendor management controls and conformity assessments.

Top gaps and control needs

Given the extent to which AI has been deployed within financial services, it will be important for firms and their suppliers to come to agreement on a global AI control framework.

In addition, these AI rules will need to be integrated with local market practice and law, some of which are also in the process of being updated (e.g., MiFID II algo and high frequency trading).

Europe’s policy will be an important input to the control framework, but other jurisdictions which are less aligned with the EU’s AI values will need to be taken into account. Clarity is required up front on how discrimination is defined and on what performance information should be shared with regulators and customers.

In general, Europe’s principles are far broader in scope than current regulations contemplate. Firms have struggled to implement the narrower existing requirements to classify algos, agree appropriate behaviours, and test and monitor algo activity.

Extending these types of obligations to take into account a wide variety of factors that are unique to an individual consumer will be challenging. A large amount of activity will need to be reviewed, and positions taken on what new controls and disclosures to clients and employees are appropriate, as illustrated in the analysis below from the Bank of England.

Path forwards

Creating an appropriate global control framework will need to involve stakeholders from across the many factions of non-financial risk management.

Data, operational risk, technology risk, record keeping and third-party risk tribes will need to come together to develop meaningful standards that integrate with their current maturity models (e.g., DCAM).

JWG will continue to frame the holistic view of regulatory AI principles and look for opportunities to test existing controls.

Our trade surveillance group will reconvene in September to discuss the development of AI use cases which take account of a whole slew of new third-party risk management obligations from across the globe.

We will be developing detailed business use cases and working with key stakeholders from across the many non-financial risk management tribes to map out a path forward this autumn.

Please let pj@jwg-it.eu know if you would like to join in.

Additional resources: