On June 20, 2023, PJ Di Giammarino, CEO and founder of JWG, and Matt Caine, Director of EMEA Financial Markets Communication Compliance at NICE, held a discussion on ‘Navigating the Winding Regulatory Road of Communication Compliance’ with a group of Financial Services Compliance and IT experts at the NICE Interactions 2023 conference in London.

During the discussion, they talked about the recent record-keeping fines imposed by the CFTC and SEC in the United States and what these fines mean for the industry. As regulators continue imposing fines for lapses in communications monitoring, the industry struggles to define what ‘good’ communications surveillance looks like. Fortunately, there is a pathway that can be delivered via open-source collaboration.

Overview

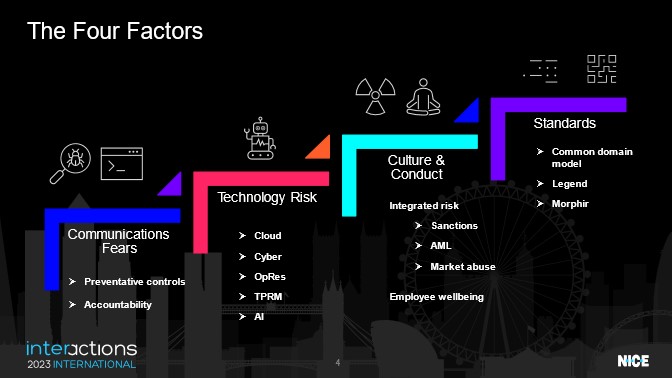

Over the last four years, the industry has seen a seismic shift in working patterns, environments and technologies within financial institutions, client interactions and personal life. The diagram above guided our conversation about the four factors that communications surveillance professionals should be taking into account in 2023.

The compliance world has been abuzz since December 2021, as federal US regulators have issued approximately $2B in fines for recordkeeping failures. To quickly summarize:

- December 2021: the first fine – 1 bank, USD 200m total

- September 2022: 15 firms, USD 1.8bn total

- May 2023: 2 banks, less than USD 50m total

Matt: What is the underlying regulatory message from these fines?

PJ: Fines grab management attention, but what management wants to know is: what was the root cause, and could it be a problem for us?

Al Capone didn’t go to Alcatraz just for tax problems. Record-keeping failures are often a symptom of more fundamental root cause issues. It may well be that there were suspicions that market behavior was not in line with expectations, but nothing could be proven other than the absence of an audit trail.

In the US fines, regulators singled out compliance officers who did not follow their own policies and the business owners who knew they were acting outside of the boundaries of the policy.

I think what’s happening here, and more broadly, is that the nature of what good policies, procedures and controls look like is changing.

Matt: Do you think that there’s more technology risk for a firm today?

PJ: Yes, the reality is that the procedures that touch various parts of financial services organisations’ infrastructure aren’t as black and white as they should be to meet regulatory expectations. Policies tend to be fairly high level, so while senior management is usually aware of them, they don’t actually have the tools to ensure that these policies are being met.

This creates a problem for jurisdictions like the UK and now Ireland, which are implementing accountability regimes that require senior management to sign off that they’ve identified, recorded and monitored regulated employee conversations.

Matt: When you say the policies aren’t black and white, can you elaborate just a little bit more on that?

PJ: I think this gets into the question of how you assign accountability for spotting the outliers. There may be a high-level communications policy, but it is unlikely to get to a sufficient level of detail to demonstrate that the senior managers were able to control it. For example, how do I know whether Magnus is having a conversation, on the personal device he sometimes uses for work, with the guy on the yacht that we’re not allowed to do business with right now? Sanctions, AML, Consumer Duty and a variety of other obligations make it very, very tricky for somebody to manage that interaction in an appropriate manner.

Matt: That’s interesting because technology is one component, which naturally leads to what you just touched on there. We also think about culture and conduct, which includes personal device usage. How would you see this impacting an organization’s ability to ensure compliance?

PJ: Firms need to take a hard look at the boundaries they draw around personal digital space for their workforces. Employees don’t necessarily want all their data to be collected, analysed and run through the same filters that work channels are subject to.

The same goes for clients. If we’re going to be recording every single client meeting or Zoom call and feeding that through various algorithms to spot conduct and behavior issues, will clients keep doing business with us?

New technology rules will soon force firms to spell out what they are doing with communications surveillance much more clearly. The EU AI Act classifies certain monitoring as ‘high risk,’ which means the technology risks need to be well understood, documented, monitored and declared to the AI authorities.

Europe’s new Digital Operational Resilience Act (DORA) will also require firms to manage their technology supply chain much more closely with third parties under much tighter contracts.

One message to take away from today is that regulations have gone far beyond the ‘who trades what?’ and are moving to ‘the how’, which we describe as managing digital infrastructure risk. That’s tricky for communications, especially when you get all these new channels across platforms that need to adhere to a common set of policies.

Matt: It all starts with capturing the relevant communications channels, right, because that’s the starting point of all of this. And with regulated employees utilising many different communication channels, these are just the building blocks of the whole compliance story. What do you see as the next phase of the compliance process in terms of adopting standard technology that looks beyond just the pure capture piece?

PJ: Great question. We analyzed the Financial Markets Standards Board’s latest ‘3 lines model’ last week, which discusses some of the key risk management issues. I recommend that this community have a look at the section on data process.

It highlights that to do risk management right, you need to take all the different risk data silos and bring them together into a ‘golden source’.

When integrated, communications risks quickly become about how outliers are spotted. Do we have the context to know whether the trade is a wash trade, or if the counterparty had a conversation referencing a sanctioned individual? It all gets back to how we tag and contextualize the data we have in the first place.

I would expect more feedback loops as you start to bring in more communications channels and work out what data we really need and how it can be tuned to meet business needs. Having trusted technology that can manage and analyze this data can simplify the process.
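To make the idea of tagging and contextualising communications data concrete, here is a minimal Python sketch. It is an illustration only, not something presented at the session: the record fields, the tag names, the `SANCTIONED_NAMES` watchlist and both functions are hypothetical, and a real programme would rely on proper sanctions screening and entity resolution rather than simple substring matching.

```python
from dataclasses import dataclass, field

# Hypothetical watchlist for illustration; not a real sanctions list.
SANCTIONED_NAMES = {"ivan example"}


@dataclass
class Communication:
    comm_id: str
    employee: str
    counterparty: str
    channel: str  # e.g. "recorded_line" or "personal_device" (assumed labels)
    text: str
    tags: set = field(default_factory=set)


@dataclass
class Trade:
    trade_id: str
    employee: str
    counterparty: str


def tag_communication(comm):
    """Attach context tags to a captured communication."""
    lowered = comm.text.lower()
    if any(name in lowered for name in SANCTIONED_NAMES):
        comm.tags.add("sanctions_reference")
    if comm.channel == "personal_device":
        comm.tags.add("unapproved_channel")
    return comm


def trades_needing_review(trades, comms):
    """Link each trade to tagged communications between the same
    employee and counterparty, and return the reasons for review."""
    flagged = {}
    for trade in trades:
        reasons = set()
        for comm in comms:
            if (comm.employee, comm.counterparty) == (trade.employee, trade.counterparty):
                reasons |= comm.tags
        if reasons:
            flagged[trade.trade_id] = reasons
    return flagged


# Made-up sample data.
comms = [
    tag_communication(c)
    for c in [
        Communication("c1", "magnus", "yacht-co", "personal_device",
                      "Spoke to Ivan Example about the deal"),
        Communication("c2", "anna", "bank-b", "recorded_line",
                      "Confirming settlement date"),
    ]
]
trades = [Trade("t1", "magnus", "yacht-co"), Trade("t2", "anna", "bank-b")]
flagged = trades_needing_review(trades, comms)
```

In this sketch only trade `t1` is flagged, because the linked conversation carries both context tags. The trade linked to an untagged conversation on a recorded line is left alone, which is the kind of context that helps separate genuine outliers from noise.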

Matt: What do you see as being necessary in a communications compliance or surveillance programme today, and what might ‘good’ look like in three or four years’ time?

PJ: Rather than merely observing that some pattern of behavior differs from the norm, control rooms should focus on getting more contextual data to filter out the false positives and spot the transactions which don’t look right for the context in which they occur. Implementing surveillance solutions that can ingest, monitor, contextualise and analyse this data can help Compliance personnel reveal the true meaning of conversations and spot risks with much less effort.

To get there, however, we have some modelling to do. The industry will need to extend the Common Domain Model from the current compliance deployment in derivatives regulatory reporting to the surveillance arena.

The CDM is a fantastic example of open-source collaboration in action. When it works, you can actually get code and data to test that you are picking up compliance obligations correctly.

JWG are currently testing the appetite to extend the CDM into risk management. Let us know if you’d like to get involved!

Matt: You just brought up AI, and we’ve heard about AI a lot today in the main sessions. What are you expecting to happen in that space?

PJ: The EU AI Act is so prescriptive that everyone in this room is going to have to start paying attention to what type of data processing they are doing and to what extent it strays into the new definition of risky artificial intelligence. It’s part of the overall regulatory conversation about ‘the how’. If you’re using a model that might potentially cause some harm, you’re going to have to recognize the risks up front, manage your relationship with data and systems providers, and ensure your board has sufficient controls in place and that these controls are declared to the regulators, employees, and customers.

But if you think about the job that most people in the room here do, it’s not that different from what a security agency does. I’ll be keeping a watchful eye on how the politicians define the boundaries of their new AI rules as they apply to financial services and surveillance.

Matt: Thank you PJ and thank you to this great audience. We look forward to continuing our conversation.

See ‘RegTech surveillance: breaking silos with digital models’ for more JWG analysis.